Atlas is OpenAI’s browser with ChatGPT built in, in other words, a browser where ChatGPT is the default interface. It can complete tasks without always redirecting you to a URL.

Most of you might think this just means fewer clicks, but the real story is much bigger than that.

Many think it is just another browser that finds results. But what matters is not what it does; it’s how it does what it does, and that is going to change the SERP game.

ChatGPT itself already acts as a search engine: it runs queries, scrapes the internet based on user prompts, summarizes the results, and presents them to you.

With Atlas, it’s different, and not just with Atlas; Google AI search works in a similar way. These browsers behave like agents: they can execute and interact with the JavaScript (JS) that renders your front end.

This means the SERP game is going to change. If your site has bad JavaScript or is slow, you won’t appear.

I used a similar example in my last post, “OpenAI Apps SDK: The App store of ChatGPT?”

I searched for

“Find me the new version of adidas black adizero evo shoes size 10.5 that can be delivered by EOD tomorrow”

on both Atlas and normal Google Search (not Google AI search). When I looked at the Network tab in the browser’s developer tools, I found something fundamentally different, and it sparked my thinking about the change that is coming to SERP.

This is where “how it does what it does” makes the difference: Atlas immediately renders all the JS, so it sees the page the way a human does.

I am not saying Google Search doesn’t render JS for SERP results. The difference is

“It is done behind the scenes” vs. “It is done live, on the same rendered content humans see.”

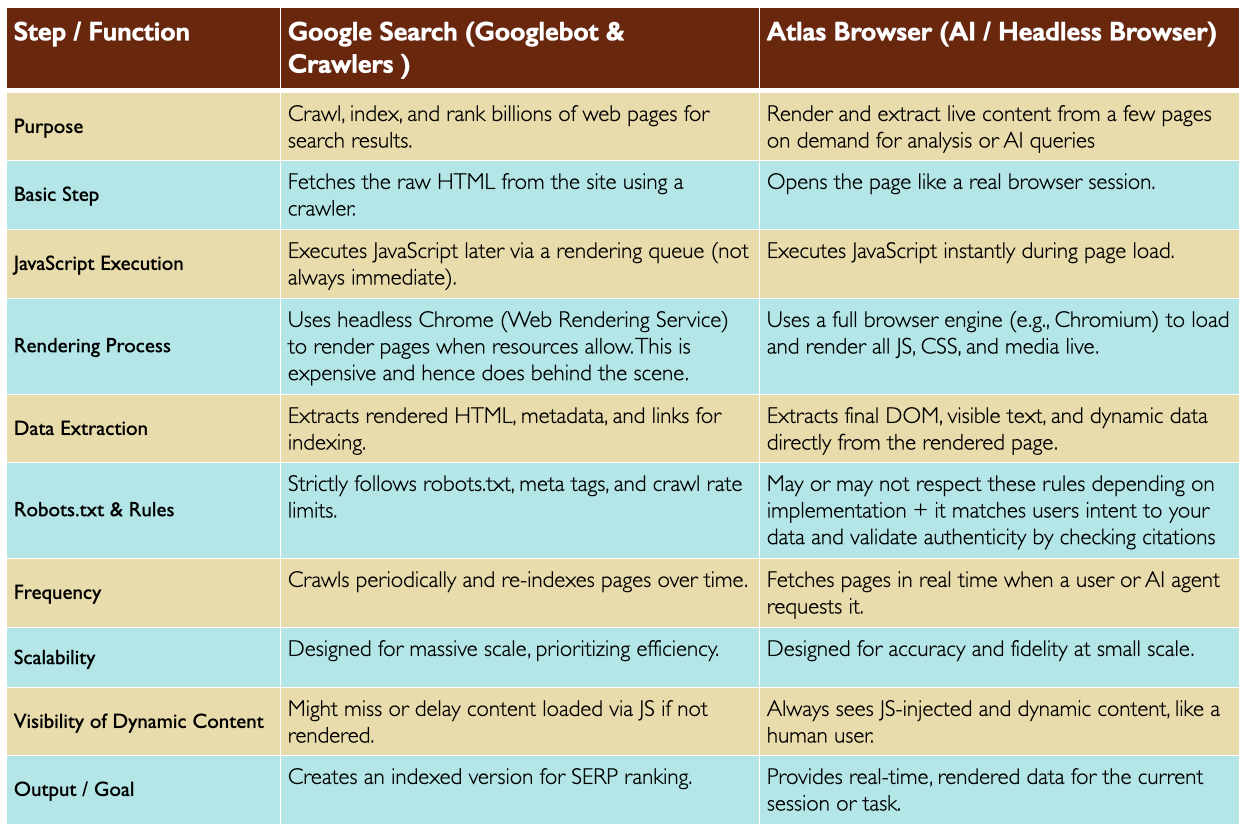

Let’s look at some of the general differences:

This is where I believe the SERP game is going to change.

When a user searches with a specific intent and context, the traditional search-engine SERP is going to fall short. As tools like Atlas and similar browsers begin to interpret user intent more deeply, the real question becomes: how do you serve that data effectively?

Some quick wins could be:

It’s not just about crawlers and indexes

We used to live in a world where website data was optimized for bots and crawlers just to appear on the SERP. That game is changing. Browser agents like Atlas will now render your site, execute your JavaScript, and extract exactly the information the user is looking for.

As discussed above, browsers like Atlas, Google AI search, and other AI tools render JS during the normal page-load lifecycle and can start analyzing DOM nodes and network responses during the initial render. This means they see the same rendered content a human user sees, including content injected by JS.

So clean up your crappy JS ASAP, and make your site agent-readable.
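A quick way to see how much of your page depends on JS is to compare two snapshots of the same page: the raw HTML your server sends and the rendered DOM (for example, copied from the Elements panel in DevTools). The minimal sketch below, using only Python’s standard `html.parser`, lists the key facts that appear only after JavaScript runs; those are the facts an agent would miss if your JS breaks or is too slow. The function names, the sample snapshots, and the “facts” are hypothetical placeholders, and plain substring matching is a deliberately rough heuristic, not how any particular agent actually reads a page.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects visible text chunks, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Only keep non-empty text that is outside script/style blocks.
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    """Return the page's visible text as one space-joined string."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def js_only_facts(raw_html: str, rendered_html: str, facts: list[str]) -> list[str]:
    """Return facts present in the rendered DOM but missing from the raw HTML."""
    raw = visible_text(raw_html).lower()
    rendered = visible_text(rendered_html).lower()
    return [f for f in facts if f.lower() in rendered and f.lower() not in raw]


if __name__ == "__main__":
    # Hypothetical snapshots of a client-side-rendered product page.
    raw = '<html><body><div id="root"></div><script src="app.js"></script></body></html>'
    rendered = (
        '<html><body><div id="root"><h1>Example Shoe</h1>'
        "<p>Price: $99.99</p><p>Delivery by tomorrow</p></div></body></html>"
    )
    facts = ["Example Shoe", "$99.99", "Delivery by tomorrow"]
    print(js_only_facts(raw, rendered, facts))
```

If the list is long, the page is effectively invisible until your JS finishes, which is exactly where slow or broken scripts hurt.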

Your Website = A Repository of Information for AI Systems

This is one more step toward the theory I wrote about in “SEO & AEO: Any Different?”

Make your website ready for agents to read and interpret all relevant data. Structure your data properly so that browser agents can resolve user queries much faster. This has to go hand in hand with the performance of your frontend.
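One common way to structure product data is schema.org markup embedded as JSON-LD. The minimal sketch below builds a `Product` with an `Offer` and `OfferShippingDetails` (delivery time in days), which maps onto the kind of query used earlier (colour, size, delivery window). The helper names and product values are hypothetical, and whether Atlas or any other agent actually reads JSON-LD is an assumption here, not something the experiment above verified.

```python
import json


def product_jsonld(*, name: str, brand: str, color: str, size: str, price: float,
                   currency: str, in_stock: bool, max_transit_days: int) -> dict:
    """Build schema.org Product data (hypothetical helper, not a library API)."""
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "brand": {"@type": "Brand", "name": brand},
        "color": color,
        "size": size,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock" if in_stock
            else "https://schema.org/OutOfStock",
            "shippingDetails": {
                "@type": "OfferShippingDetails",
                "deliveryTime": {
                    "@type": "ShippingDeliveryTime",
                    # Assumes same-day handling and 1..max_transit_days in transit.
                    "handlingTime": {"@type": "QuantitativeValue",
                                     "minValue": 0, "maxValue": 0, "unitCode": "DAY"},
                    "transitTime": {"@type": "QuantitativeValue",
                                    "minValue": 1, "maxValue": max_transit_days,
                                    "unitCode": "DAY"},
                },
            },
        },
    }


def jsonld_script_tag(data: dict) -> str:
    """Serialize to a <script> tag; escape '</' so the JSON cannot close the tag early."""
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


if __name__ == "__main__":
    # Hypothetical product values for illustration only.
    print(jsonld_script_tag(product_jsonld(
        name="Example Running Shoe", brand="Example Brand", color="Black",
        size="10.5", price=99.99, currency="USD", in_stock=True, max_transit_days=1,
    )))
```

Rendering this tag in the server-sent HTML, rather than injecting it with client-side JS, keeps the data available even if your scripts are slow.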

In this new world, you should make sure your site is ready for agents to read. This doesn’t mean traditional SEO work is no longer needed; this comes on top of what you were already doing, or what you were ignoring.

This further strengthens my belief that:

In the near future, your website is likely to evolve into a repository of information for AI systems and search models, rather than relying solely on direct user visits.

I’d love to hear your thoughts and ideas on this — feel free to share them in the comments or drop me a message.